How Multimodal AI Is Changing Human-AI Interaction

Find out how businesses use Multimodal AI services to improve human-AI communication and streamline workflows with text, voice, images, and video.

Imagine talking to your computer and having it understand not just your words, but also your gestures, the images you show it, or even the tone of your voice.

But what exactly is Multimodal AI?

How does it bring together all these different kinds of information? And why is it becoming so important for industries like healthcare, online shopping, and education? Understanding this technology helps us see how the relationship between humans and AI is changing.

What Is Multimodal AI?

Multimodal AI is a type of artificial intelligence designed to understand and process information from multiple sources, or “modalities”, simultaneously. These modalities can include:

- Text – Written content like emails, documents, or social media posts.
- Images – Photos, screenshots, or other visual data.
- Audio – Spoken words, music, or sounds.
- Video – Moving images combining both visual and audio information.
- Sensor Data – Readings from motion sensors, IoT devices, and similar hardware.

Traditional AI systems usually work with a single type of input. For example, chatbots mostly process text, and image recognition software only looks at images. Multimodal AI combines these inputs, giving machines a richer understanding of the world, much closer to how humans perceive it.

Think of it like this: if traditional AI is a person who can only read, Multimodal AI is someone who can read, listen, see, and even understand gestures, all at the same time.

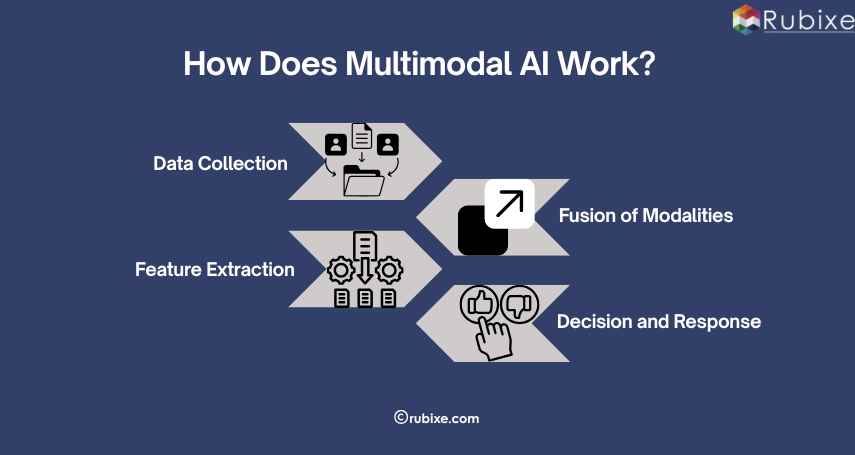

How Does Multimodal AI Work?

The power of Multimodal AI lies in its ability to integrate multiple data streams into one cohesive understanding. Here’s a simplified breakdown:

1. Data Collection

The AI collects data from different sources. For example, in a video call:

- Audio: What the user says
- Video: Facial expressions and gestures
- Text: Chat messages
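Before any analysis happens, the streams usually need to be lined up in time, because a frown in the video only means something next to what was said at that moment. The sketch below groups events from the three streams into shared time windows. It is a minimal illustration, assuming each event already carries a timestamp; the `Event` class, the `align_by_window` function, and the two-second window are hypothetical choices, not part of any specific product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    modality: str     # "audio", "video", or "text"
    timestamp: float  # seconds since the call started
    payload: str      # stand-in for the raw data (transcript chunk, frame label, chat message)


def align_by_window(events: list[Event], window: float = 2.0) -> dict[int, dict[str, list[str]]]:
    """Group events from different modalities into shared, fixed-length time windows."""
    buckets = defaultdict(lambda: defaultdict(list))
    for event in sorted(events, key=lambda e: e.timestamp):
        index = int(event.timestamp // window)  # which window this event falls into
        buckets[index][event.modality].append(event.payload)
    # Convert the nested defaultdicts into plain dicts, ordered by window index
    return {i: dict(mods) for i, mods in sorted(buckets.items())}


# Hypothetical events from a short video call
events = [
    Event("audio", 0.4, "I can't find the export button"),
    Event("video", 0.9, "frowning"),
    Event("text", 1.5, "where is export??"),
    Event("audio", 3.2, "oh, found it"),
]
aligned = align_by_window(events)
print(aligned)
```

Window 0 ends up holding the spoken complaint, the frown, and the chat message together, which is what later steps rely on. Real systems often use finer-grained or overlapping windows.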

2. Feature Extraction

Each modality is processed separately to extract useful information. For instance:

- Audio can reveal sentiment or emotion.
- Video can detect attention, engagement, or actions.
- Text can provide context and intent.
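To make per-modality processing concrete, here is a deliberately simple sketch in which each modality gets its own extractor that returns a small numeric feature vector. The word list, the loudness measure, and the `"face_visible"` frame labels are hypothetical stand-ins; production systems would use trained models (for example speech-emotion, vision, and language models) rather than hand-written rules.

```python
import math

# Hypothetical keyword list; a real system would use a trained text classifier.
PROBLEM_WORDS = {"can't", "broken", "confused", "error", "stuck"}


def text_features(message: str) -> list[float]:
    """Share of words that signal a problem, plus a flag for whether it is a question."""
    words = [w.strip("?!.,") for w in message.lower().split()]
    problem_share = sum(w in PROBLEM_WORDS for w in words) / max(len(words), 1)
    return [problem_share, 1.0 if "?" in message else 0.0]


def audio_features(samples: list[float]) -> list[float]:
    """Root-mean-square amplitude: a crude proxy for how loudly someone is speaking."""
    rms = math.sqrt(sum(s * s for s in samples) / max(len(samples), 1))
    return [rms]


def video_features(frame_labels: list[str]) -> list[float]:
    """Share of frames where an (assumed) upstream face detector reported a visible face."""
    visible = sum(label == "face_visible" for label in frame_labels)
    return [visible / max(len(frame_labels), 1)]


features = {
    "text": text_features("I'm stuck, where is export?"),
    "audio": audio_features([0.3, -0.4, 0.5, -0.2]),
    "video": video_features(["face_visible", "face_visible", "no_face"]),
}
print(features)
```

Each extractor knows nothing about the others; combining their outputs is the job of the fusion step.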

3. Fusion of Modalities

Next, the AI combines these insights. By correlating facial expressions with words, or gestures with tone, it can pick up on subtle cues that humans often convey without stating them outright.
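One of the simplest fusion strategies is late fusion: each modality produces its own score (say, a probability that the user is frustrated), and the scores are combined with a weighted average. The sketch below assumes such per-modality scores already exist and uses made-up weights; it also skips any modality that is missing, such as a user with the camera turned off. Late fusion only combines each model's conclusions. Systems that learn correlations between modalities directly usually fuse earlier, for example by feeding combined features into a single model or using cross-modal attention.

```python
from typing import Dict, Optional


def late_fusion(scores: Dict[str, Optional[float]], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality scores, ignoring modalities with no score."""
    available = {m: s for m, s in scores.items() if s is not None and m in weights}
    total_weight = sum(weights[m] for m in available)
    if total_weight == 0:
        raise ValueError("no usable modality scores to fuse")
    # Renormalize by the weights of the modalities that are actually present
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical frustration scores from three separate models; the camera is off.
frustration = late_fusion(
    scores={"text": 0.7, "audio": 0.9, "video": None},
    weights={"text": 0.4, "audio": 0.4, "video": 0.2},
)
print(round(frustration, 2))
```

With video missing, the text and audio weights are renormalized, giving a fused score of about 0.8.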

4. Decision and Response

Finally, the AI produces a response or takes action based on the integrated data. This could be:

- Recommending products based on spoken requests and visual preferences.
- Detecting stress or confusion in online learning platforms.
- Assisting in healthcare by analyzing patient speech, facial cues, and medical images together.
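The final step turns fused scores into an action. Here is a minimal rule-based sketch, assuming the fusion step outputs one score between 0 and 1 per signal; the signal names, actions, and 0.6 threshold are illustrative assumptions, and real products often use a learned policy or a model that generates the response directly.

```python
# Hypothetical mapping from a detected signal to a response.
ACTIONS = {
    "confusion": "offer a simpler explanation",
    "frustration": "escalate to a human agent",
    "purchase_intent": "show matching products",
}


def choose_action(fused_scores: dict[str, float], threshold: float = 0.6) -> str:
    """Act on the strongest fused signal, but only if it is confident enough."""
    if not fused_scores:
        return "continue normally"
    signal, score = max(fused_scores.items(), key=lambda item: item[1])
    if score < threshold or signal not in ACTIONS:
        return "continue normally"
    return ACTIONS[signal]


print(choose_action({"confusion": 0.72, "frustration": 0.30}))      # offer a simpler explanation
print(choose_action({"confusion": 0.40, "purchase_intent": 0.35}))  # continue normally
```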

Examples of Multimodal AI in Action

Multimodal AI isn’t just a concept; it’s already reshaping the way we interact with technology. Here are some real-world examples:

- Customer Support: Companies now use AI that can understand both text and voice simultaneously. Imagine calling a helpline: the AI not only listens to your words but also analyzes your tone to detect frustration, then prioritizes urgent queries.
- Healthcare: AI can analyze medical images, patient history, and spoken symptoms together to support faster and more accurate diagnoses. For example, some cancer detection tools use multimodal analysis to combine MRI scans with patient records.
- Virtual Assistants: Next-generation assistants, such as newer versions of Siri or Alexa, are starting to combine voice, text, and contextual cues to respond more naturally, for example by suggesting reminders based on calendar entries and recent conversations.
- Education and E-Learning: AI tutors can now watch students through a webcam, listen to their questions, and read their typed responses to provide personalized help. It’s like having a real tutor who understands not just what you ask, but how you feel while asking it.
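The customer-support example above can be sketched as a priority queue in which a caller’s place depends on both what they say and how they sound. This is a minimal illustration, assuming upstream models already produce an urgency score from the transcript and a frustration score from the voice, each between 0 and 1; the `SupportQueue` class and its equal weights are hypothetical.

```python
import heapq
import itertools


class SupportQueue:
    """Serve callers in order of combined text urgency and vocal frustration."""

    def __init__(self, text_weight: float = 0.5, tone_weight: float = 0.5) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker: equal priorities stay first-come, first-served
        self.text_weight = text_weight
        self.tone_weight = tone_weight

    def add(self, caller: str, text_urgency: float, tone_frustration: float) -> None:
        priority = self.text_weight * text_urgency + self.tone_weight * tone_frustration
        # heapq is a min-heap, so push the negated priority to pop the largest first
        heapq.heappush(self._heap, (-priority, next(self._arrival), caller))

    def next_caller(self) -> str:
        return heapq.heappop(self._heap)[2]


queue = SupportQueue()
queue.add("alice", text_urgency=0.2, tone_frustration=0.9)  # mild words, frustrated voice
queue.add("bob", text_urgency=0.8, tone_frustration=0.1)
queue.add("carol", text_urgency=0.3, tone_frustration=0.3)
order = [queue.next_caller() for _ in range(3)]
print(order)
```

A text-only system would have served Bob first; adding the tone signal moves Alice, whose words sound mild but whose voice signals frustration, to the front.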

Benefits of Multimodal AI

Multimodal AI is not just a technological upgrade; it changes the quality of human-AI interaction. Some of the main benefits include:

| Benefit | How It Helps Users & Businesses |
|---|---|
| Better Understanding | By processing multiple types of input, AI can understand context, sentiment, and intent more accurately. |
| Natural Interaction | Users can communicate with AI using voice, text, images, or gestures, making it more human-like. |
| Efficiency & Speed | Decisions can be faster because the AI can consider all relevant information at once. |
| Personalization | Recommendations, responses, and support become more tailored to individual needs. |
| Accessibility | Multimodal AI helps people with disabilities by allowing multiple ways to interact with technology. |

How Businesses Can Use Multimodal AI

Multimodal AI is particularly transformative for businesses looking to improve user experience, reduce bounce rates, and increase engagement. Here’s how:

- E-Commerce: AI can analyze product images, user reviews, and search queries together to recommend products more accurately. Visual search features allow users to upload images and find similar products instantly.
- Marketing and Content: AI tools can analyze engagement across videos, social media posts, and customer comments to suggest content that resonates better. Personalized campaigns can adapt to multiple user signals, increasing click-through rates.
- Customer Experience: Chatbots and virtual assistants powered by Multimodal AI can respond faster and more naturally. By detecting frustration or confusion through voice or text analysis, businesses can intervene proactively and reduce churn.
- Healthcare and Wellness: By combining a patient’s speech, medical imaging such as X-rays or MRIs, and data from wearable devices such as heart rate monitors or fitness trackers, Multimodal AI can build a clearer, more complete picture of a patient’s health.
- Education & Training: Multimodal AI is changing the way students learn and teachers teach. AI tutors can interpret multiple signals from students, such as the questions they ask, their facial expressions, and even their tone of voice, which allows them to adapt lessons in real time to each student’s pace and level of understanding.
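The visual-search idea from the e-commerce example can be sketched as nearest-neighbor search over embeddings: the shopper’s photo and their typed query are each compared with every product, and the two similarities are blended. This assumes images and text have already been turned into vectors (for instance by a CLIP-style model, which maps both into a shared space); the three-dimensional vectors, product names, and 0.6 image weight below are invented for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_products(query_image, query_text, catalog, image_weight=0.6):
    """Rank products by a weighted blend of image and text similarity, highest first."""
    scored = []
    for name, (image_vec, text_vec) in catalog.items():
        score = (image_weight * cosine(query_image, image_vec)
                 + (1 - image_weight) * cosine(query_text, text_vec))
        scored.append((name, score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings: (image vector, text vector) per product
catalog = {
    "red sneaker": ([0.9, 0.1, 0.0], [1.0, 0.0, 0.0]),
    "red handbag": ([0.6, 0.0, 0.8], [0.0, 1.0, 0.0]),
    "blue sneaker": ([0.1, 0.9, 0.0], [1.0, 0.0, 0.0]),
}
# The shopper uploads a photo of a red shoe and types "sneakers"
results = rank_products([1.0, 0.0, 0.0], [1.0, 0.0, 0.0], catalog)
print([name for name, _ in results])
```

On image similarity alone the red handbag would rank second (0.6 versus about 0.11 for the blue sneaker); the typed query lifts the blue sneaker above it.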

Challenges of Multimodal AI

While promising, Multimodal AI comes with challenges:

1. Data Complexity

Handling multiple types of data, like text, images, audio, and video, all at the same time, is not easy. Each type of data has its own structure and meaning. For example, analyzing a picture is very different from understanding a sentence or recognizing a voice tone.

2. Privacy Concerns

Since Multimodal AI often uses sensitive information, like your voice, video, or personal messages, there are real privacy and security risks. If this data is not protected, it can be accessed by hackers or misused by companies. Businesses need to follow strict data protection rules and make sure users know how their information is being used.
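One practical safeguard is data minimization: strip obvious personal identifiers from transcripts before they are stored or used for training. The sketch below uses two deliberately simple regular expressions, which are assumptions for illustration only; they will miss many formats and can over-match (a long order number may look like a phone number), so real deployments usually rely on dedicated PII-detection tools alongside access controls and encryption.

```python
import re

# Simplified patterns for illustration; not a complete PII detector.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(transcript: str) -> str:
    """Replace email addresses and phone-number-like digit runs with placeholders."""
    transcript = EMAIL_PATTERN.sub("[EMAIL]", transcript)
    return PHONE_PATTERN.sub("[PHONE]", transcript)


print(redact("Reach me at jane.doe@example.com or +1 555-123-4567."))
```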

3. Bias

AI learns from the data it is given. If the training data is not diverse or representative, the AI can make mistakes or treat some groups unfairly. For example, an AI that recognizes faces may work well for some skin tones but not for others if it was trained on limited data. This can lead to wrong predictions or decisions. Companies should use diverse, balanced data and test how their systems perform for different groups to make AI fairer for everyone.
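A common first check for this kind of bias is to measure accuracy separately for each group rather than only overall. The sketch below does that for a face-matching model; the records are invented purely to show the calculation, and `group_a`/`group_b` are placeholder labels. A large gap between groups is a signal to collect more representative training data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, true_label). Returns accuracy per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


# Invented evaluation records, for illustration only
records = [
    ("group_a", "match", "match"), ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"), ("group_a", "match", "no_match"),
    ("group_b", "no_match", "match"), ("group_b", "match", "match"),
    ("group_b", "no_match", "match"), ("group_b", "no_match", "no_match"),
]
per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

Here the overall accuracy of 62.5% hides the fact that `group_b` is served noticeably worse (50% versus 75%).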

4. Integration

Adding Multimodal AI to existing business systems is not always simple. Companies need to integrate it carefully with current tools and workflows so that it actually helps employees and customers rather than confusing them. Poor integration can make systems harder to use, frustrate employees, or slow down processes instead of improving them.

Despite these challenges, the benefits for both businesses and consumers are significant, and ongoing research is steadily addressing many of these limitations.

The Future of Human-AI Interaction

Multimodal AI is just the beginning. In the near future, AI could:

- Understand emotions and intentions better, enabling empathetic interactions.
- Translate speech, gestures, and facial expressions across languages in real time.
- Assist in creative projects, combining text, audio, and visual suggestions seamlessly.

Ultimately, human-AI interaction will become more fluid, natural, and intuitive, similar to interacting with another human being rather than a machine.

Multimodal AI is changing the way we communicate with technology. By processing text, audio, images, and video together, it allows AI to understand context, emotion, and intent, making interactions smarter and more human-like.

For businesses, it offers a clear advantage: better customer engagement, personalized experiences, and reduced bounce rates. For individuals, it means more natural, intuitive, and accessible technology.

As this technology matures, learning to use Multimodal AI will no longer be optional; it will be essential for anyone looking to stay ahead.